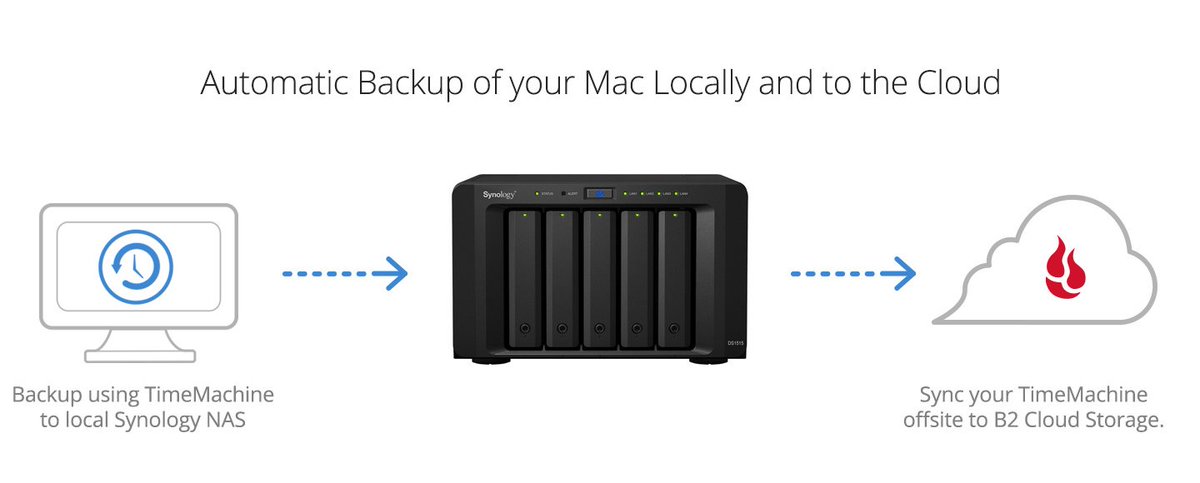

B2 Fireball Guide: Creating and Relinking a Hyper Backup Job

Synology NAS owners can now create Hyper Backup jobs and use a B2 Fireball as the destination. You do not have a backup in B2 Cloud Storage, but would like to. Your internet connection is not fast enough to complete the initial backup in a reasonable amount of time. This guide will cover the following topics: connecting the Fireball to your network and creating a Hyper Backup job on your personal Synology device.

I like the hard delete option and will use that if I can't get to the root of the issue! Thanks again.

The directory I'm syncing is a mounted Samba share, for what it's worth. Here are two copies being uploaded just 7 seconds apart in the logs.

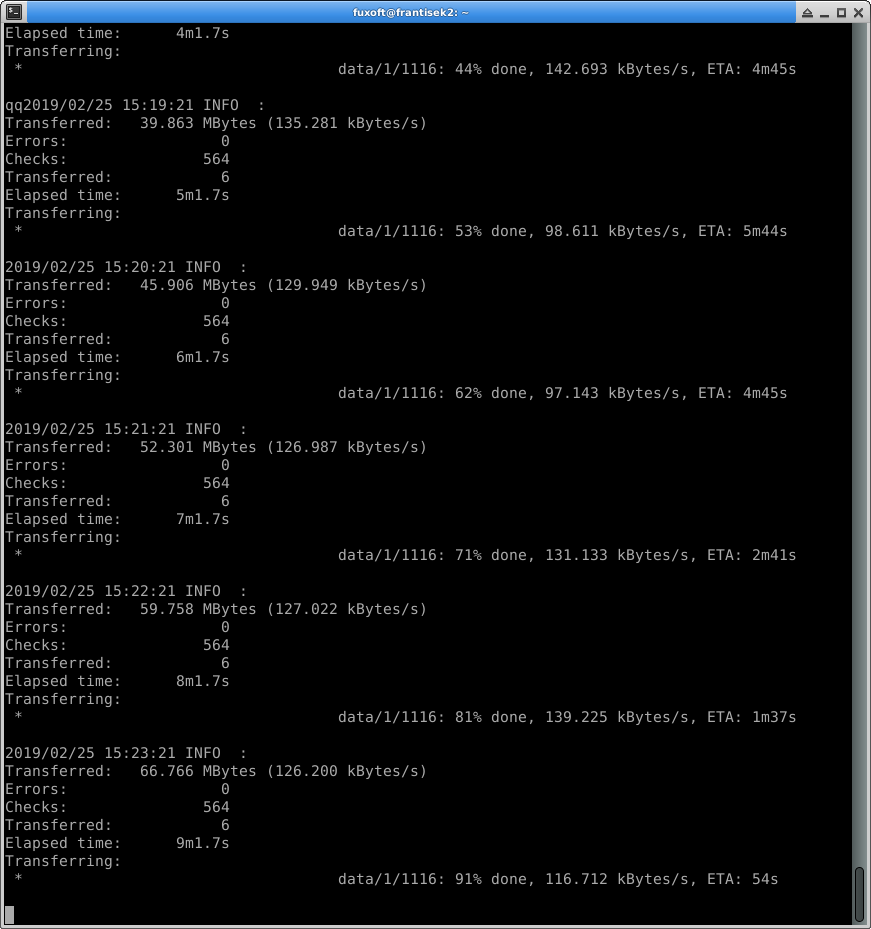
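For anyone wanting to check their own log for the same pattern, here is a minimal sketch that counts how often each path was logged as copied. It assumes rclone's `--verbose` log lines have the shape shown in the comment (the timestamp and file name there are made up for illustration), and `count_copies` is a hypothetical helper name, not part of rclone.

```shell
#!/bin/sh
# Assumed log line shape (illustrative values):
#   2022/02/10 03:00:07 INFO  : some/file.jpg: Copied (new)

count_copies() {
    # $1 is the rclone log file.
    # Prints "<count> <path>" for every path copied more than once,
    # highest count first. Paths containing ": " would be split incorrectly.
    awk -F': ' '/: Copied \(/ { n[$2]++ }
        END { for (p in n) if (n[p] > 1) print n[p], p }' "$1" | sort -rn
}
```

Calling `count_copies /home/venus/rclone_upload.log` would then list the files that were uploaded repeatedly, which can be compared against the version counts shown in the bucket.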

Thanks for the response :) This got me thinking: maybe rclone is erroring out or restarting or something to that effect, because I have one folder where all of the original copies were uploaded on the same day, February 10, 2022. All 197 of these files (~800 including duplicates) were uploaded over the span of about 15 minutes, kicking off at that day's cron run. Yet as I scroll down the page when viewing the bucket, files with a higher number in their filename generally have more copies. About a quarter of them have no previous versions, but as I keep scrolling the numbers increase, getting to as many as (15) versions. Each previous version has the same file size and the same SHA-1 hash. Seems like rclone is maybe doing a few at a time and not accurately keeping track of which ones it's already kicked off? Hence why the one out of order with (13) copies is a larger file, an mp4 video.

Hey all, I am trying to keep a directory backed up in a Backblaze bucket. Once a day cron kicks off this script: `/usr/bin/rclone sync /srv/samba/share/photos remote:fs02-photos --log-file /home/venus/rclone_upload.log --verbose`

I've had this running for a year or two by now, and I've noticed a lot of files appear to have duplicate copies. I see lots of (2)s and (3)s, but sometimes it's (40) or more. The root directory even has a random desktop.ini directory with a whopping (449) by it, one copy for every day I've run this by the look of it. I've noticed that for a lot of the files with many copies, such as (30), most of the copies come from the same day, a couple of minutes after each other.

So first off, for anyone else who uses rclone + cron, does my script look correct? And second, is there a simple way to avoid duplication that I'm missing?
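One thing the cron setup in the post does not rule out is two rclone runs overlapping. Whether that is actually the cause of the duplicates is not established in this thread, so treat the following as a sketch under that assumption. It wraps the same sync command in `flock` so a second run exits instead of starting alongside the first. The source path, remote, and log path are taken from the post; `LOCK` and the `run_sync` name are hypothetical.

```shell
#!/bin/sh
# Paths and remote name are copied from the post; LOCK is a hypothetical path.
RCLONE="${RCLONE:-/usr/bin/rclone}"
SRC="/srv/samba/share/photos"
DEST="remote:fs02-photos"
LOG="/home/venus/rclone_upload.log"
LOCK="${LOCK:-/tmp/rclone_photos.lock}"

run_sync() {
    # flock -n gives up immediately (non-zero exit) if another run still
    # holds the lock, so two syncs never run at the same time.
    # Extra flags such as --dry-run are passed through via "$@".
    flock -n "$LOCK" "$RCLONE" sync "$SRC" "$DEST" \
        --log-file "$LOG" --verbose "$@"
}
```

The cron script would end with a plain `run_sync` call. Running `run_sync --dry-run` first should log what rclone would transfer without uploading anything, which may help show whether it considers unchanged files on the Samba mount to be modified.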
